Yesterday, the President of IQS Research, Shawn Herbig, spent an hour on the radio discussing some of the intricacies involved […]

All posts tagged: Polling

City Research: Beyond the Political Polls, What Does Your Community Really Think?

Professional, targeted research is in a different league than political polling. The kind of institutional research that IQS Research does […]

What Makes a Statistically Valid Sample?

Most people have a pretty limited understanding of statistics and research analytics, and they’d probably say they are thankful for that […]

“DEWEY DEFEATS TRUMAN” – A case study in trusting the untrustworthy

Last week, I posted a commentary on the dangers of trusting polls and research derived from samples of convenience. In […]

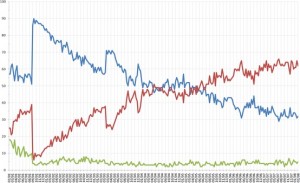

Polling: A double-edged sword

Let us pretend for a moment that we all understand the foundations of probability theory – because this is a […]
